What is Chain of Thought Prompting? How to Get Better Results from Your LLM Prompts

In this article, we explore Chain of Thought Prompting for Large Language Models (LLMs), a technique for getting more accurate and relevant results from them. As the field of natural language processing (NLP) advances, LLMs have emerged as powerful tools for a wide range of applications, and they have the potential to change how we interact with technology and solve complex problems. However, using LLMs effectively can be challenging, especially when you need a specific kind of result from a prompt.

What is Chain of Thought Prompting?

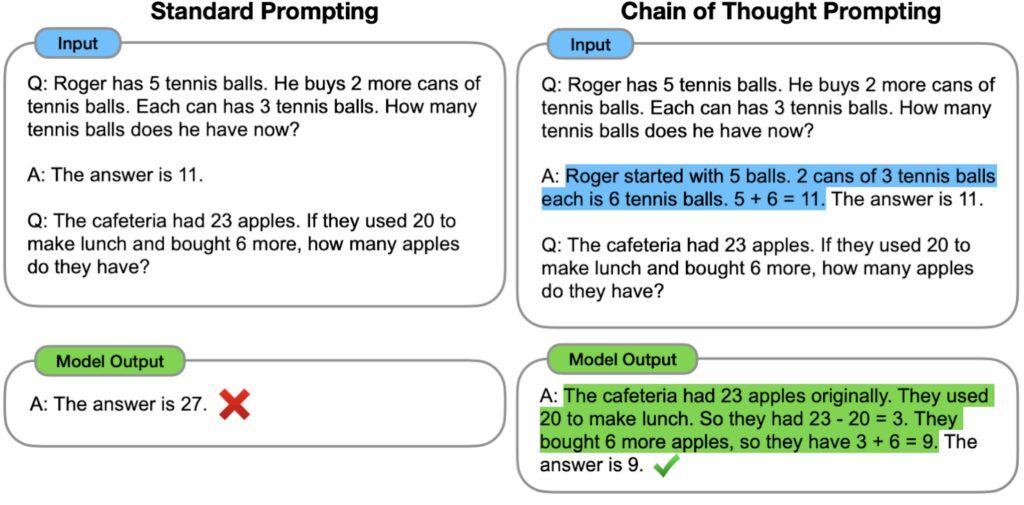

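Chain of thought prompting, as described in this article, is a technique that involves breaking down complex queries or tasks into a series of interconnected prompts. Instead of relying on a single input, the model is guided through a sequence of prompts that refine and build upon each other. By doing so, the model can better understand the user’s intent and produce more accurate and contextually relevant outputs. (In the research literature, the term most often refers to prompting a model to write out its intermediate reasoning steps within a single response; the multi-prompt approach described here is also commonly called prompt chaining.)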
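To make the idea concrete, here is a minimal sketch of one task written first as a single prompt and then as a chain. The task, the prompt wording, the sales figures, the `{previous}` placeholder convention, and the `render` helper are all made up for illustration and are not part of any library.

```python
# One all-in-one prompt (hypothetical wording).
single_prompt = (
    "Analyze our Q3 sales data, find the weakest region, "
    "and draft a recovery plan."
)

# The same task split into a chain of prompts. "{previous}" marks the spot
# where the model's answer to the prior prompt would be inserted.
chain = [
    "Summarize the key trends in this Q3 sales data: {data}",
    "Based on this summary, which region is weakest and why?\n\n{previous}",
    "Draft a three-step recovery plan for the region identified here:\n\n{previous}",
]


def render(template: str, **values: str) -> str:
    """Fill a prompt template with named values."""
    return template.format(**values)


# Illustrative, invented figures; only the first prompt needs raw data.
first_prompt = render(chain[0], data="North: +4%, South: -9%, West: +1%")
print(first_prompt)
```

Each later prompt is narrower than the one before it, so the model only has to handle one part of the task at a time.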

LLMs such as OpenAI’s GPT models can generate human-like text in response to human-provided input. These models have been trained on a vast amount of data, enabling them to pick up on context and generate coherent responses. They are widely used for language translation, content creation, chatbots, and more. However, interacting with LLMs can sometimes feel like talking to a highly intelligent but often unpredictable partner.

The Challenge of Effectively Using LLMs

As I mentioned in my previous article, “What is Prompt Engineering,” LLMs require appropriate prompts to produce accurate and relevant responses. Crafting the right prompt is crucial, as it sets the context and influences the model’s output. A single, standalone prompt often leads to incomplete or erroneous responses on complex tasks. This is where Chain of Thought Prompting comes into play.

How Chain of Thought Prompting Works

- Initiate the Chain: Begin the interaction with a general prompt that introduces the context of the conversation or task.

- Refine and Specify: In the subsequent prompts, gradually refine the instructions, providing more specific details or questions. This helps narrow down the possible interpretations and steers the model in the desired direction.

- Adapt and Learn: Throughout the chain, the model’s responses can be used as input in the next prompt. The model itself is not retrained; carrying its earlier responses forward simply keeps the context in front of it, so each answer can build on the previous ones and the results are more coherent and accurate.

- Ensure Clarity and Consistency: Keep the prompts clear and concise, avoiding ambiguity. Consistent wording and context reinforcement help the model to stay on track and deliver reliable results.
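The steps above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not a definitive implementation: `run_chain` and the `{previous}` placeholder are hypothetical names, and `echo_model` is a stand-in for whatever function actually calls your LLM.

```python
from typing import Callable, List


def run_chain(model: Callable[[str], str], prompts: List[str]) -> List[str]:
    """Send prompts in order, inserting each response into the next prompt."""
    responses: List[str] = []
    previous = ""
    for template in prompts:
        # str.replace is used instead of str.format so that braces in a
        # model response cannot break the substitution.
        prompt = template.replace("{previous}", previous)
        previous = model(prompt)
        responses.append(previous)
    return responses


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call: returns a tagged copy of the prompt."""
    return f"[answer to: {prompt}]"


prompts = [
    # Initiate the chain with general context.
    "We are planning a database migration. Describe the typical phases.",
    # Refine and specify, reusing the previous response.
    "Given these phases: {previous} List the main risks in each phase.",
    # Build on the refined answer.
    "For these risks: {previous} Suggest one mitigation per risk.",
]

for response in run_chain(echo_model, prompts):
    print(response)
```

To try this with a real model, replace `echo_model` with a function that sends the prompt to your provider and returns the text of the reply.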

Benefits and Best Practices

Chain of Thought Prompting offers several advantages for using LLMs effectively:

- Improved Accuracy: By guiding the model through a sequence of prompts, you increase the chances of obtaining accurate and relevant responses.

- Enhanced Control: Chains provide a structured way to interact with LLMs, allowing for better control over the output and reducing the risk of unintended results.

- Context Preservation: Feeding earlier responses into later prompts helps preserve context throughout the conversation, leading to more coherent and meaningful interactions.

- Efficiency: Although chains are sequential, this technique can streamline the process and save time by reducing the need for multiple isolated queries that each have to restate the context.
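One way to picture the control a chain gives you is to check each response before moving on. The sketch below is an assumption-laden illustration rather than an established API: `run_checked_chain`, the validator functions, the `{previous}` placeholder, and `stub_model` are all hypothetical, and the stub only imitates a model so the example runs on its own.

```python
from typing import Callable, List, Tuple

# A step pairs a prompt template with a function that accepts or rejects
# the model's response to it.
Step = Tuple[str, Callable[[str], bool]]


def run_checked_chain(model: Callable[[str], str], steps: List[Step]) -> List[str]:
    """Run prompts in order and stop at the first response that fails its check."""
    responses: List[str] = []
    previous = ""
    for template, is_valid in steps:
        response = model(template.replace("{previous}", previous))
        if not is_valid(response):
            step_number = len(responses) + 1
            raise ValueError(f"Step {step_number} failed validation: {response!r}")
        responses.append(response)
        previous = response
    return responses


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call, used only to keep the sketch runnable."""
    return "yes" if "yes or no" in prompt else "Details: " + prompt


steps: List[Step] = [
    ("Answer yes or no: is the text below written in English?",
     lambda r: r in {"yes", "no"}),
    ("The language check returned: {previous}. Explain the next step.",
     lambda r: r.startswith("Details:")),
]

results = run_checked_chain(stub_model, steps)
print(results)
```

Stopping at the first failed check keeps an unexpected response from being passed into later prompts.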

Incorporating Chain of Thought Prompting into your prompt engineering can be highly beneficial when refining the behavior you need from an LLM. With this technique, users can get more out of these language models while keeping their interactions accurate and in context.

Conclusion

Overall, understanding Chain of Thought Prompting is valuable for anyone seeking to use large language models effectively, particularly for engineering use cases. By breaking down complex queries and tasks into a series of interconnected prompts, users can improve the accuracy of LLM outputs and their control over them, making these models more dependable tools for a variety of applications. As the technology continues to evolve, techniques like Chain of Thought Prompting will remain important for getting the most out of LLMs.

Related Articles

- AI in Drug Discovery – Harnessing the Power of LLMs

- What is ChatGPT? A Complete Explanation

- Domo custom apps using Domo DDX Bricks with the assistance of ChatGPT

- Resolution for various ChatGPT errors, including ChatGPT Internal Server Error

- Understanding Large Language Models (LLMs)

- What is Prompt Engineering? Unlock the value of LLMs

- What are Graph Algorithms?

- What is Neo4j Graph Data Science?

- ChatGPT for Analytics: Getting Access & 6 Valuable Use Cases

- LLM Pipelines / Graph Data Science Pipelines / Data Science Pipeline Steps

- Using a Named Entity Recognition LLM in a Biomechanics Use Case

- What is Databricks? For data warehousing, data engineering, and data science

- Databricks Architecture – A Clear and Concise Explanation

- What is Databricks Dolly 2.0 LLM?