Translate Visual Networks into Graphs: Unveiling the Power of AI in Deciphering Complex Diagrams

By David Hughes / Engineering & AI Practice Director

February 23, 2024

We frequently look back at previous work and consider how the rapidly advancing capabilities of GenAI could be applied to similar challenges today.

Recently, one of these thought experiments started a conversation here at Graphable regarding the possibility and value of utilizing large language vision models (LLM-V) to evaluate the inherent network of objects and connections encoded in visual knowledge representations.

We asked ourselves, “Could diagrams and other informational visuals be parsed and modeled into a graph?”

Programmatically representing and persisting diagrams, schematics, and logic flows as graph models is a powerful enabler. This capability can accelerate data ingestion and analysis and reduce the time to value when deriving insights from visual data. By contrast, manual data entry is error-prone and tedious.

In fact, at scale in large engineering projects or when dealing with voluminous data, manual data entry of visual information is often unrealistic. Further, establishing a digital twin of a visual diagram or schematic can enable simulations and analytics using data ingested against the digital twin model.

We created a program that can automatically parse visual data, model it in a graph schema, and persist it as a knowledge graph. With diagrams converted into graph formats, we can leverage graph-based analysis techniques, such as network analysis, to uncover insights that aren’t easily surfaced in the original visual representations found in diagrams, schematics, or logic flows. This can include identifying critical nodes, analyzing connectivity, and understanding the structure and dependencies within the data.
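As a minimal sketch of the kind of network analysis described above, the snippet below counts how many edges touch each node to find the most connected one. The `Edge` type, the node names, and the edge labels are all hypothetical; this is not the D2G implementation, only an illustration of treating an extracted diagram as a graph.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    """One directed, labeled connection extracted from a diagram."""
    source: str
    target: str
    label: str


def degree_centrality(edges):
    """Count how many edges touch each node (in-degree plus out-degree)."""
    degree = defaultdict(int)
    for edge in edges:
        degree[edge.source] += 1
        degree[edge.target] += 1
    return dict(degree)


# Hypothetical edges, as they might be extracted from a small flow diagram.
edges = [
    Edge("User", "Agent", "ASKS"),
    Edge("Agent", "LLM", "CALLS"),
    Edge("Agent", "Tool", "INVOKES"),
    Edge("Tool", "Agent", "RETURNS_TO"),
]

centrality = degree_centrality(edges)
most_connected = max(centrality, key=centrality.get)
print(most_connected, centrality[most_connected])
```

Degree is the simplest possible measure of a critical node. A graph database or graph library would offer richer measures such as betweenness, but the idea is the same: once the diagram is a graph, these questions become queries.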

Further, graph representations of diagrams can facilitate better collaboration and communication among teams, especially in multidisciplinary projects. Graphs can be more intuitive to understand and work with, especially for stakeholders who might not be familiar with the original schematic notations.

The Technology Behind Vision Models

Vision models are advanced multimodal large language models (LLMs) developed to analyze, interpret, and convert visual content into structured, machine-readable representations. These models leverage deep learning techniques to process and understand images, enabling them to extract and contextualize visual information in ways that parallel human visual comprehension.

By integrating visual data processing with natural language understanding, these models facilitate a wide range of applications, from image recognition to generating descriptive narratives of visual content. In this article, we will explore the capability of vision models to parse and understand a network representation and output a graph-based schema of the objects and relationships in an input image.
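To make "a graph-based schema of the objects and relationships" concrete, here is a hedged sketch of validating a model response before using it. The JSON shape (`nodes` with `id` and `label`, `edges` with `source`, `target`, and `label`) is an assumption made for illustration, not the format D2G uses, and `parse_graph_response` is a hypothetical helper.

```python
import json


def parse_graph_response(raw: str) -> dict:
    """Parse a model response into nodes and edges, rejecting dangling edges."""
    data = json.loads(raw)
    node_ids = {node["id"] for node in data["nodes"]}
    for edge in data["edges"]:
        if edge["source"] not in node_ids or edge["target"] not in node_ids:
            raise ValueError(f"edge references unknown node: {edge}")
    return data


# Hypothetical response text; a real model may need stricter prompting
# or retries before it returns valid JSON in this shape.
raw_response = """
{
  "nodes": [{"id": "n1", "label": "Agent"}, {"id": "n2", "label": "Tool"}],
  "edges": [{"source": "n1", "target": "n2", "label": "INVOKES"}]
}
"""

graph = parse_graph_response(raw_response)
print(len(graph["nodes"]), "nodes,", len(graph["edges"]), "edges")
```

Checking that every edge points at a known node is a cheap guard against one common failure: a model describing a connection to an object it never listed.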

The history of programmatically parsing diagrams, schematics, and flow charts is intertwined with the broader development of computer vision, artificial intelligence (AI), and document analysis technologies. Parsing visual representations of documents into a machine-readable format has been a significant challenge due to the complexity, variety, and contextual nuances of these documents. The journey to achieve this capability spans several decades and reflects advancements in computational techniques and technologies.

Early Efforts and Challenges (1960s-1980s)

- Initial Explorations: The roots of optical character recognition (OCR) date back to 1870. The earliest attempts at parsing visual documents programmatically began in the 1960s and 1970s, focusing primarily on basic pattern recognition and OCR techniques, as demonstrated by projects like GISMO. These efforts were limited by the computational power available at the time and the rudimentary state of image processing technologies.

- Structured Approaches: In the 1980s, researchers began developing more structured approaches, such as those described in “Bayes Smoothing Algorithms for Segmentation of Binary Images Modeled by Markov Random Fields,” to recognize specific elements within diagrams, such as lines, circles, and text. However, these methods often required highly structured and straightforward diagrams to function reliably.

Advancements in Computer Vision (1990s-2000s)

- Improvements in OCR: The 1990s brought significant improvements in OCR technology, allowing for better text recognition within diagrams. This period also saw the development of more sophisticated image processing algorithms capable of detecting geometric shapes and basic relationships between elements.

- Vectorization Techniques: Efforts were made to convert raster images (pixel-based) into vector images (line-based), facilitating the identification and manipulation of diagram components. This approach can be seen in the effort to extract building features from high resolution satellite images. However, understanding the semantic meaning of these components and their relationships remained challenging.

Rise of Machine Learning and AI (2000s-2010s)

- Machine Learning Models: With the advent of machine learning, especially deep learning in the late 2000s and 2010s, significant progress was made. Convolutional neural networks (CNNs) and later, graph neural networks (GNNs), began to be applied to the problem, offering improved recognition of complex shapes and relationships within diagrams.

- Semantic Analysis: Researchers started to focus not just on detecting elements but also on understanding their semantic roles and grammar within diagrams. This involved parsing and interpreting the functionality and relationships of components in schematics and flow charts, moving beyond mere shape recognition.

Integration of AI and Knowledge Representation (2010s-Present)

- Knowledge Graphs and Semantic Web: Recent efforts have focused on not just parsing diagrams but converting them into structured formats like knowledge graphs. This involves understanding the context and semantics of the diagrams, facilitated by advancements in natural language processing (NLP) and AI.

- Comprehensive AI Systems: The integration of AI systems capable of processing both text and images has led to more sophisticated parsing of diagrams. These systems can understand complex relationships and hierarchies within diagrams, converting them into detailed graph structures or other structured data formats.

Current Trends and Future Directions

- End-to-End Automation: Current research and development efforts aim at creating end-to-end systems that can automatically parse diagrams, schematics, and flow charts with minimal human intervention. The approaches range from neural networks that learn visual representations from natural language, like CLIP, which can map visual concepts in images to their names, to large language models like GPT-4 that can understand the context and content of diagrams.

- Cross-Domain Applications: There is also a growing interest in applying these technologies across various domains, such as engineering, biology, pharmacology, and information technology, to generate knowledge graphs and other structured representations from technical documents automatically.

Deciphering Complex Diagrams Across Industries

LLMs, specifically multimodal vision models, have recently demonstrated significant advances in parsing images. In October 2023, the paper “The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision)” reported on the state of the art (SoTA) of GPT-4V and previous models. It led us to consider the various use cases for vision models beyond summarization, descriptive analytics, and segmentation.

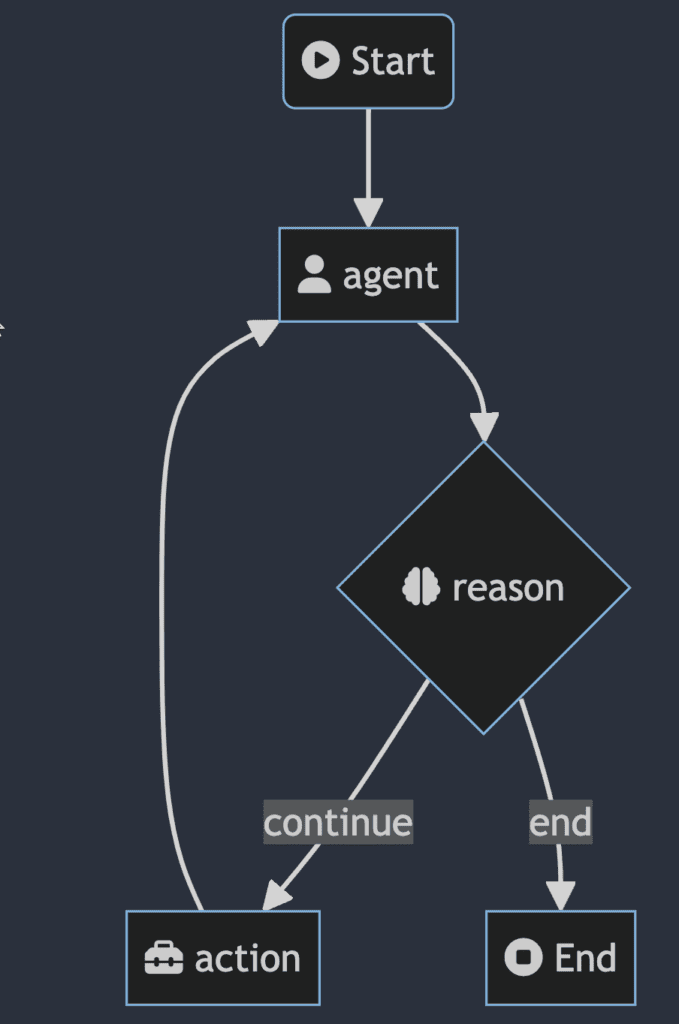

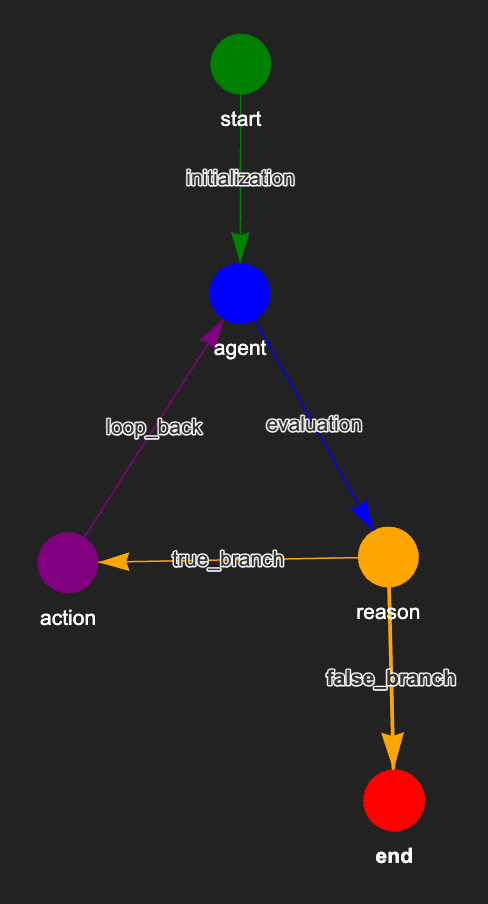

Case Study 1: Application Logic Flow

The first experiment we conducted examined a simple use case of parsing and extracting information from an application logic flow. The visual diagram has only a few objects and connections. This logic flow represents the architecture of an intelligent agent equipped with tools and the ability to reason about its data exchange with an LLM and tool output. We are excited about the features of LangChain’s LangGraph and have seen powerful results integrating its various design patterns into our work at Graphable.

This experiment was a ‘meta-experiment’ in some sense because what became our D2G program is itself written in LangChain’s LangGraph 🙂

Interestingly, this ‘simple’ diagram did present some initial challenges to the first version of the diagram-to-graph (D2G) application we created. Initially, D2G’s instructions to its own internal chains, agents, LLM, and tools were too narrow in how they considered objects to be represented and labeled. With some refactoring, we were able to achieve accurate results.

In this D2G output, we can see a faithful representation of the original application logic flow. D2G was able to capture the objects, labels, and relationships depicted in the visual representation of the original application. Of note, the LLM inferred reasonable labels for the edges/relationships.
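One way the captured objects and relationships could be persisted is by turning each edge into a Cypher `MERGE` statement for a graph database such as Neo4j. The sketch below only builds the query text and its parameters; it does not connect to a database. The `Entity` node label, the `name` property, and both helper functions are assumptions for illustration and are not taken from D2G.

```python
import re


def to_relationship_type(label: str) -> str:
    """Normalize a free-text edge label into an UPPER_SNAKE_CASE type name."""
    cleaned = re.sub(r"[^A-Za-z0-9]+", "_", label).strip("_").upper()
    if not cleaned:
        return "RELATED_TO"  # fallback when the model gives no usable label
    if cleaned[0].isdigit():
        cleaned = "REL_" + cleaned  # unquoted identifiers cannot start with a digit
    return cleaned


def edge_to_cypher(source: str, target: str, label: str):
    """Return a Cypher statement and its parameters for one extracted edge."""
    rel_type = to_relationship_type(label)
    # The relationship type is inlined after sanitizing; node names stay
    # as parameters so they are never concatenated into the query text.
    query = (
        "MERGE (a:Entity {name: $source}) "
        "MERGE (b:Entity {name: $target}) "
        f"MERGE (a)-[:{rel_type}]->(b)"
    )
    return query, {"source": source, "target": target}


query, params = edge_to_cypher("Agent", "Tool", "calls tool")
print(query)
print(params)
```

Using `MERGE` rather than `CREATE` means re-running the load for the same diagram does not duplicate nodes or relationships, which matters when the extraction is repeated after prompt changes.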

Potential Applications:

- Automated Documentation and Knowledge Management: The interpretation of flow diagrams representing software architecture or business processes could support the automatic generation of detailed documentation. This documentation can then be stored in a graph database like Neo4j, providing an easily navigable and queryable knowledge base for new and existing team members.

- Enhanced Software Development and Maintenance: By interpreting logic flow diagrams, LLMs can assist in identifying inefficiencies or bottlenecks in the software development process.

- Compliance and Auditing: In industries with stringent regulatory compliance requirements, tools like D2G can enable easier audits of systems against compliance standards, as auditors can query the graph database to understand how data flows through the system and ensure all regulatory requirements are met.

- Risk Management and Security Analysis: By analyzing application logic flow diagrams, graph models can help identify potential security vulnerabilities or risks, such as improper data handling or potential points of failure.

- Integration Planning and Management: For organizations looking to integrate disparate systems, LLMs can interpret the flow diagrams of these systems and store them in Neo4j. This facilitates the identification of integration points and dependencies, making the planning and management of system integrations more efficient.

- Change Impact Analysis: Before implementing changes in a system, graph models can simulate the impact of these changes by analyzing the current logic flow diagrams and predicting how modifications would affect the overall system.

- Training and Simulation: By interpreting and storing application logic in a graph database, organizations can create simulations or training environments that mimic real-world scenarios. This is particularly useful for onboarding new employees or testing system changes in a controlled environment.

- Business Process Optimization: LLMs can analyze business process flow diagrams to identify redundancies or inefficiencies.

- Customer Experience Mapping: For businesses focused on improving customer experience, LLMs can interpret customer journey maps persisted in graphs. This allows businesses to analyze and optimize touchpoints and interactions to enhance overall customer satisfaction. This capability extends into patient journey maps and healthcare delivery.

- Strategic Decision Support: By providing a comprehensive and queryable model of an organization’s processes, systems, and workflows in Neo4j, decision-makers can leverage this data to make more informed strategic decisions, anticipate future challenges, and identify new opportunities.
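Several of the applications above, change impact analysis in particular, reduce to a reachability question on the graph. As a rough sketch, assuming edges point from a component to the components that consume its output, a breadth-first search lists everything downstream of a proposed change. The component names and the `downstream` helper are hypothetical.

```python
from collections import deque


def downstream(adjacency, start):
    """Return every node reachable from `start` via directed edges."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, []):
            if neighbor not in seen and neighbor != start:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


# Hypothetical logic flow: each key feeds the components in its list.
adjacency = {
    "API": ["Auth", "Orders"],
    "Orders": ["Billing"],
    "Billing": ["Ledger"],
    "Auth": [],
}

impacted = downstream(adjacency, "Orders")
print(sorted(impacted))
```

In a graph database the same question would typically be a variable-length path query; the point here is only that the diagram must first exist as a graph for the question to be answerable at all.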

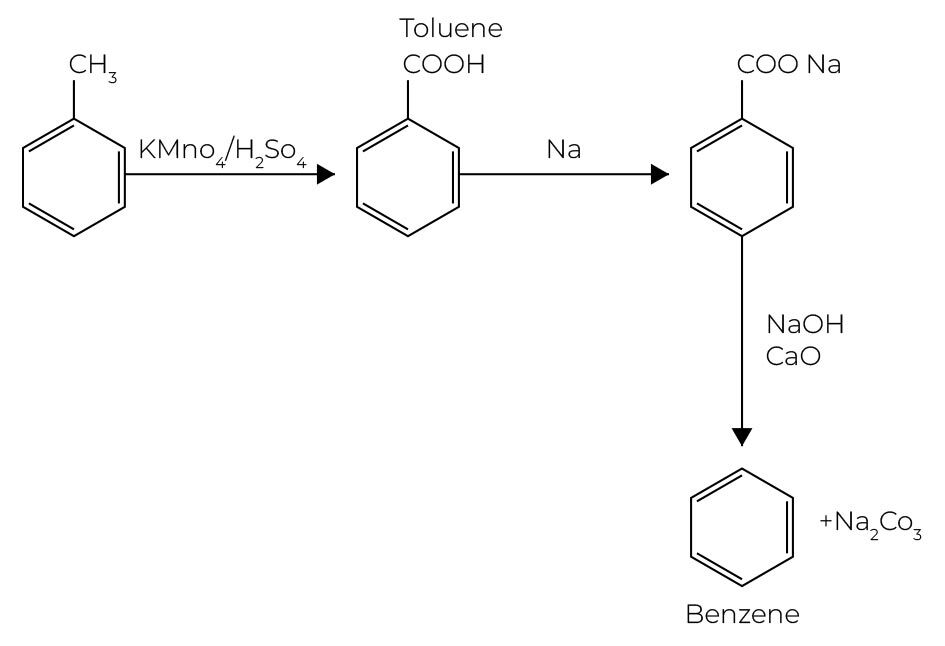

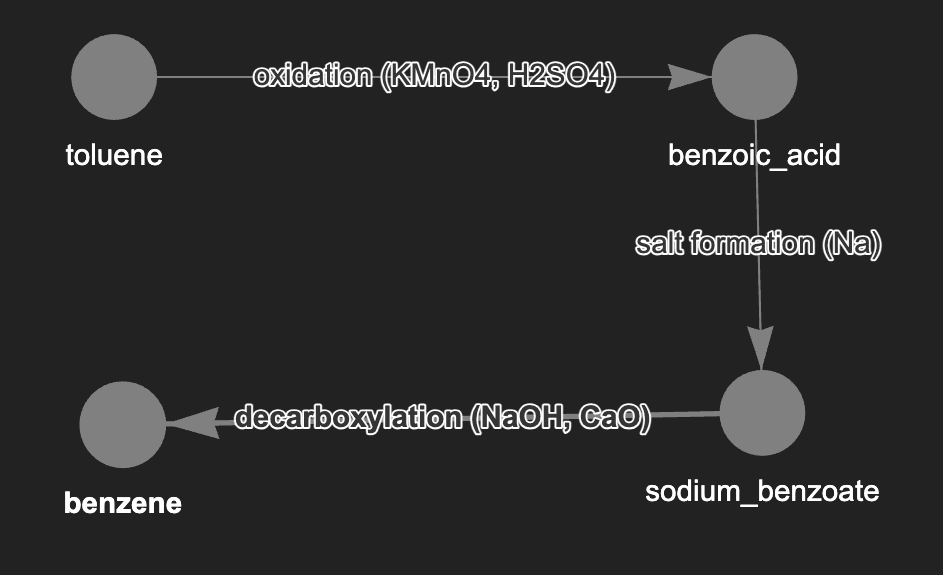

Case Study 2: Pharmaceutical Synthetic Route

With the promising results from our first efforts, we tested the ability of the D2G workflow to capture the information and semantics of synthetic routes. We knew from past projects with pharmaceutical clients that this is a sought-after capability in the industry. Here, the added complexity of lower resolution images, chemical structures as object shapes, labeled relationships, and object labels that appear both as legends and encoded within the chemical structures presented challenges that we were eager to explore.

This example led to additional refinements of our instruction set to the LLM chain and tools. The output again demonstrated the ability of D2G to adhere to the synthetic route logic, capture objects and relationships, and include chemical reactions in the edge labels as part of the state transitions represented in the route. Up to this point, the instructions and refinements remained generalizable to a wide array of diagrams from various industries.
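A synthetic route captured this way can be read as a chain of state transitions, with the reaction carried on each edge. The sketch below walks such a chain from a starting material to the final product. The compound names and reaction names are generic placeholders rather than real chemistry, and `walk_route` is a hypothetical helper that assumes a linear route with one outgoing step per compound.

```python
def walk_route(steps, start):
    """Follow a linear route from `start`, collecting (from, reaction, to)."""
    by_source = {source: (reaction, target) for source, reaction, target in steps}
    current = start
    visited = {start}
    path = []
    while current in by_source:
        reaction, target = by_source[current]
        path.append((current, reaction, target))
        if target in visited:  # guard against cycles in a bad extraction
            break
        visited.add(target)
        current = target
    return path


# Placeholder steps, deliberately listed out of order, as an extraction
# from an image would not guarantee reading order.
steps = [
    ("Intermediate 1", "reduction", "Intermediate 2"),
    ("Starting material", "coupling", "Intermediate 1"),
    ("Intermediate 2", "deprotection", "Product"),
]

route = walk_route(steps, "Starting material")
for source, reaction, target in route:
    print(f"{source} --[{reaction}]--> {target}")
```

Real routes often branch or converge, in which case the dictionary keyed by source would need to hold a list of steps per compound.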

Potential Applications:

- Automated Knowledge Extraction and Management: Graph representations of synthetic processes can facilitate easy querying, analysis, and visualization of synthetic pathways, helping researchers quickly understand and explore various synthetic strategies without manually interpreting each diagram.

- Enhanced Research and Development (R&D): By analyzing a graph database, researchers can identify novel synthetic pathways, optimize existing ones, and uncover relationships or patterns that were not evident before. This can accelerate the discovery of new drugs by enabling a more efficient exploration of chemical space and synthetic possibilities.

- Collaborative Research: Researchers across different institutions or companies could contribute to and access a centralized knowledge base of synthetic routes. This facilitates collaboration on a global scale, pooling resources and knowledge to tackle complex pharmaceutical challenges.

- Educational Tool: Structured data in a graph database like Neo4j supports interactive learning platforms for chemistry students and professionals. These platforms can dynamically illustrate synthetic pathways, reaction mechanisms, and the decision-making process in designing a synthetic route, enhancing educational outcomes.

- Intellectual Property Management: Graph persistence supports patent analysis and claims validation by providing a structured representation of synthetic routes. This can help identify potential patent infringements or opportunities for novel patent claims by comparing new and existing synthetic pathways.

- Predictive Analysis and Optimization: Integrating structured synthetic routes with predictive analytics tools can help forecast the outcomes of untested synthetic pathways, identify potential bottlenecks, and suggest optimizations. This can significantly reduce the trial-and-error aspect of laboratory work, saving time and resources.

- Supply Chain and Manufacturing Optimization: By understanding the synthetic routes in detail, companies can better plan raw material procurement, anticipate production challenges, and reduce waste.

- Regulatory Compliance and Reporting: Clear, traceable, and detailed documentation of synthetic routes as graph representations can be easily adapted to meet the specific reporting requirements of regulatory agencies.

- Personalized Medicine and Custom Synthesis: Graph analytics can support the development of personalized medicine by enabling the rapid design and testing of custom synthetic routes tailored to produce small batches of highly specific drugs. This could be particularly useful for rare or underserved diseases or individualized therapy plans.

- Cross-Disciplinary Innovation: Capturing synthetic routes encourages cross-disciplinary research by making synthetic chemistry data accessible to researchers from fields like biology, materials science, and computational modeling. This could lead to the development of new materials, bioactive compounds, and computational algorithms for synthetic chemistry.

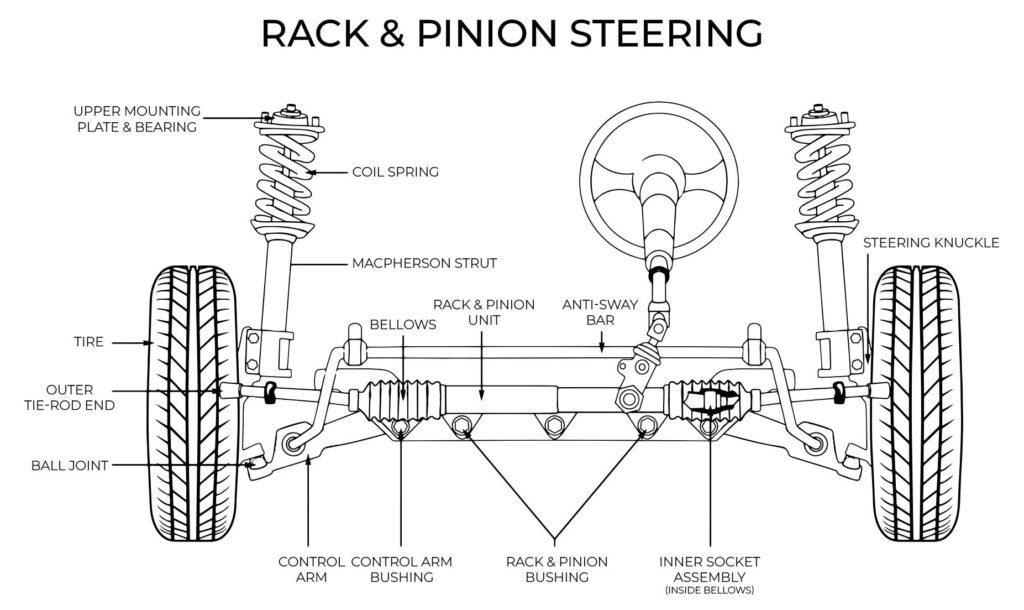

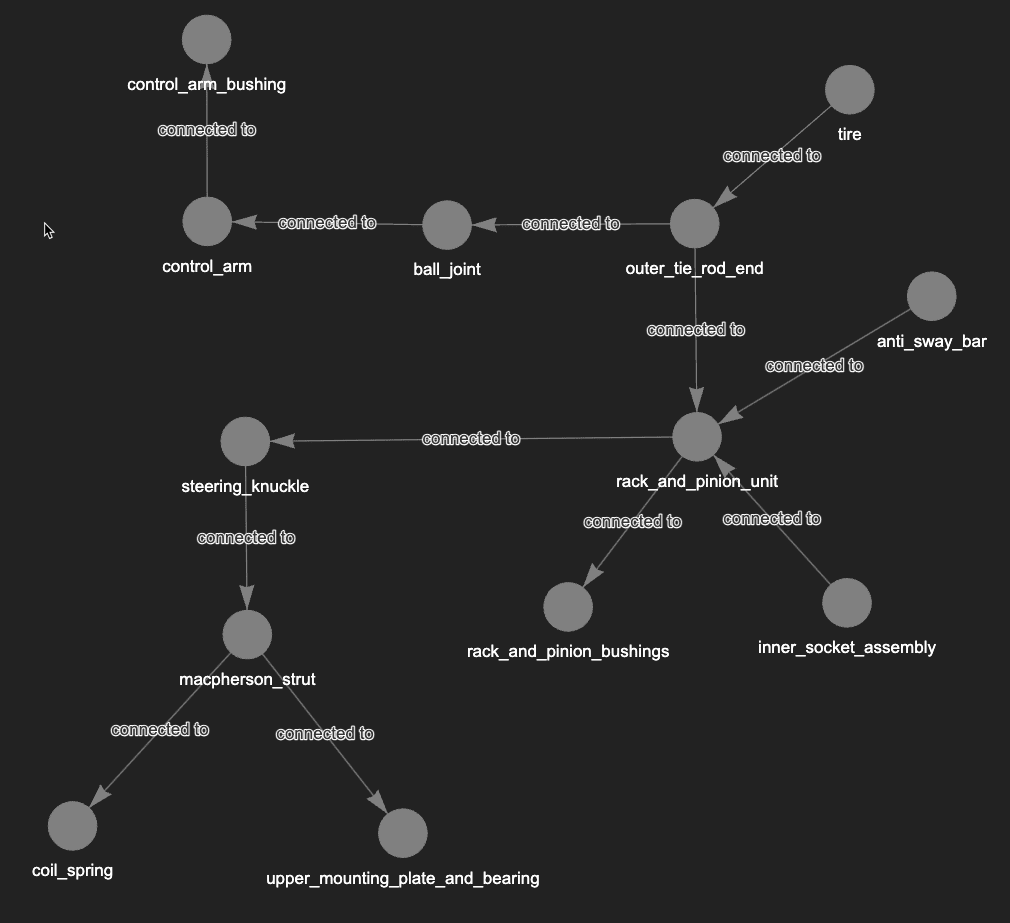

Case Study 3: Automotive Schematics

The next step in our experiments was to understand the ability to progress past logic flows and synthetic routes into schematics and systems. The ability to parse this type of visual information has significant ramifications in predictive maintenance, logistics, network intelligence, supply chains, automation, and other critical business use cases. Here, we provided the D2G workflow with a schematic for a mechanical system in a car. The LLM used by D2G was tasked with identifying, extracting, modeling as a graph, and loading the information from the schematic into a knowledge graph.

The result was largely successful. The graph output captures the entities in the schematic. Some of the relationships demonstrate the choices made, and the challenges faced, by the vision model in determining connections. For example, the McPherson Strut is connected directly to both the coil spring and the upper mounting plate. At first, I thought this was an error in the output, but after some additional review I could see that a strut does connect to the upper mount via a shaft that travels through the coil spring. As with humans, the model’s interpretation is a matter of perspective.

Potential Applications:

- Automated Design Analysis and Optimization: Automatically interpret and analyze mechanical schematics to identify design inefficiencies or potential improvements. Persisting these schematics in a graph database allows for complex queries to identify components that frequently fail or are over-engineered, enabling optimization of design for performance, cost, and reliability.

- Supply Chain and Component Management: Track parts and components across different versions of machines or vehicles by mapping their usage in schematics over time. This can improve inventory management, predict demand for spare parts, and streamline the supply chain by identifying interchangeable parts and minimizing stock levels.

- Maintenance and Troubleshooting: Create an interactive tool for maintenance staff that links mechanical schematics to a graph database of maintenance records, allowing them to query the database for troubleshooting steps, maintenance history, and potential failure points. This can reduce downtime and improve maintenance efficiency by providing quick access to relevant information.

- Collaborative Product Development: Facilitate collaboration between engineers, designers, and other stakeholders by providing a dynamic, structured representation of mechanical schematics. Changes and iterations can be tracked in the graph database, enabling team members to visualize the impact of modifications on the overall design and subsystems.

- Integration with IoT for Predictive Maintenance: Integrate the graph database with IoT sensor data from machinery to predict maintenance needs. By understanding how components are connected and how they function within the system, predictive models can more accurately determine when parts need servicing, reducing unplanned downtime.

- Regulatory Compliance and Safety Analysis: Use the structured representation of mechanical systems to ensure compliance with safety and regulatory standards. The graph database can help map every component to relevant regulations, facilitating audits and safety analysis by highlighting potential non-compliance or risks.

- Enhanced Training and Simulation: Develop advanced training programs and simulations based on a detailed understanding of mechanical systems derived from schematics. This can help engineers and technicians better understand complex systems, improving their ability to design, maintain, and troubleshoot.

- Customization and Configuration Management: Support the customization of products to specific customer requirements by dynamically adjusting schematics and tracking these customizations in the graph database. This enables a more agile response to customer needs while maintaining integrity and control over product configurations.

- Knowledge Sharing and Documentation: Automatically generate comprehensive documentation and knowledge bases from mechanical schematics. By structuring this information in a graph database, companies can create intuitive ways to explore and understand complex mechanical systems, facilitating knowledge sharing across the organization.

- Cross-disciplinary Innovation: Encourage innovation by making mechanical system data accessible to professionals from other disciplines, such as software developers, data scientists, and electrical engineers. This could lead to developing new features, products, or solutions that integrate mechanical systems with cutting-edge technologies in fields like AI, robotics, and digital twin simulations.

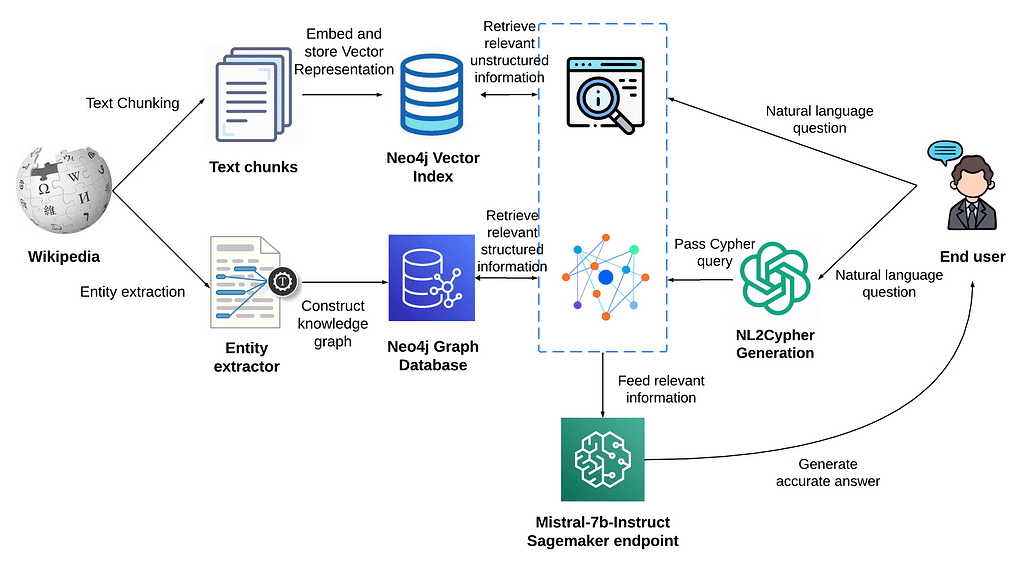

Case Study 4: Architecture Logic Flow

In our final experiment with vision models in our custom diagram-to-graph (D2G) application, we had the agents we designed in D2G, using LangChain and LangGraph, examine an architecture for Retrieval Augmented Generation (RAG) developed by Neo4j. The article from Neo4j identifies the enhanced accuracy that can be achieved by grounding LLMs deployed in a RAG pattern with the connectedness of a graph database while leveraging semantic search capabilities powered by Neo4j’s vector indexes. Here, our interest was in how a vision model would work with different shapes in a flow diagram, recursive edges, and containers, such as the one depicted by the blue dotted rectangle in the diagram. Containers in this context are shapes that represent aggregations or hierarchies of individual entities and themselves represent a higher abstraction of the contained shapes.

The result of this final experiment was consistent with our previous explorations. One observation worth highlighting is that the model created additional nodes from edge labels that were verbs. This was unexpected and is likely configurable in our instruction prompts and tooling. Additionally, the container presented a challenge for the vision model that resulted in a deviation from the diagram. The blue dotted rectangle representing the retriever logic was interpreted as a triangular logic flow from the End user, to the user’s natural language question, to NL2Cypher Generation, and back to the user. Logically, this makes sense: the user asks a question, the question is translated to a Cypher query, the query is executed and grounded information is passed as context to the LLM (Mistral-7b-Instruct SageMaker endpoint), and the accurate answer is returned to the user. However, the resulting graph omits the accurate answer as either a node or an edge and so misses the intent. The blue dotted container is the likely culprit in this case. Our ongoing efforts have shown promising results in addressing this complexity.
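One possible way to handle verb labels that were turned into nodes is a post-processing pass that folds each such node back into a single labeled edge. This is a sketch of that idea only, not how D2G addresses it (the text above suggests prompt and tooling changes). It assumes edges are `(source, label, target)` tuples and that the set of verb-like node names has already been identified by some other step; it also does not handle verb nodes chained to other verb nodes.

```python
def collapse_verb_nodes(edges, verb_nodes):
    """Replace A -> verb -> B chains with a single A -[VERB]-> B edge."""
    incoming = {verb: [] for verb in verb_nodes}
    outgoing = {verb: [] for verb in verb_nodes}
    kept = []
    for source, label, target in edges:
        if target in verb_nodes:
            incoming[target].append(source)
        elif source in verb_nodes:
            outgoing[source].append(target)
        else:
            kept.append((source, label, target))
    for verb in sorted(verb_nodes):
        rel_type = verb.upper().replace(" ", "_")
        for source in incoming[verb]:
            for target in outgoing[verb]:
                kept.append((source, rel_type, target))
    return kept


# Hypothetical extraction in which "translated to" became a node.
edges = [
    ("Question", "", "translated to"),
    ("translated to", "", "Cypher query"),
    ("Cypher query", "EXECUTED_ON", "Neo4j"),
]

cleaned = collapse_verb_nodes(edges, {"translated to"})
for edge in cleaned:
    print(edge)
```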

Potential Applications:

- Automated Network Optimization: Automatically interpret network logic flows to identify bottlenecks or inefficiencies within the network infrastructure. By persisting this data in Neo4j, organizations can use graph analytics to optimize network paths, improve load balancing, and ensure high availability and performance.

- Enhanced Cybersecurity Posture: Map the entire network infrastructure in a graph database to visualize and analyze potential attack vectors and vulnerabilities. This comprehensive view enables the proactive identification of weaknesses, better planning of security measures, and quick response to incidents by understanding the relationships and dependencies between network components. Incoming network data can then be linked to the network infrastructure.

- Disaster Recovery Planning: Use the graph database to model application and network dependencies, facilitating the development of more effective disaster recovery plans. Organizations can simulate various failure scenarios to understand the impact on business operations and prioritize recovery efforts based on critical dependencies.

- Compliance and Audit Trails: Automatically document network changes and configurations in the graph database to maintain a historical record for compliance auditing and troubleshooting. This can help in demonstrating compliance with regulations that require detailed logging of network and application changes, as well as providing insights into the evolution of the IT infrastructure over time.

- Configuration Management: Track and manage the configurations of network devices and applications through a graph database. This centralized repository allows for the easy identification of configuration drifts, unauthorized changes, and the impact of changes across the network, improving the stability and reliability of IT services.

- Capacity Planning and Resource Allocation: Analyze the graph database to understand the utilization patterns and interdependencies of network resources. This information can inform capacity planning decisions, ensuring that resources are allocated efficiently to meet current and future demands without over-provisioning.

- Service Catalog and Inventory Management: Create a dynamic service catalog that maps all IT assets, applications, and services, including their interrelations, stored in Neo4j. This facilitates effective inventory management, quick access to service configurations, and easier impact analysis for changes or incidents.

- Incident Response and Root Cause Analysis: Leverage the graph database to improve incident response times and root cause analysis. By having a detailed map of the network and application infrastructure, IT teams can quickly identify affected services, trace the source of issues, and understand the impact of outages or performance degradations.

- Cloud Migration and Multi-Cloud Management: Assist in cloud migration projects by modeling on-premises and cloud environments in a graph database. This helps in planning migration strategies, managing multi-cloud environments, and optimizing cloud resource usage by understanding dependencies and network flows.

- Integration with DevOps and CI/CD Pipelines: Integrate the graph database with DevOps tools and CI/CD pipelines to automatically update the network and application infrastructure model with each deployment. This ensures that the infrastructure documentation is always up to date, facilitating better collaboration between development and operations teams.

Challenges and Solutions

Throughout the course of creating Graphable’s diagram-to-graph (D2G) solution, and integrating LangChain, LangGraph, and graph representations, we were met with expected obstacles and some unexpected challenges. Generally, we knew that the non-deterministic nature of current vision models would result in a variance in the graph representations that would need to be accounted for. The core of these challenges was the models’ ability to parse and semantically model the entities and their relationships as depicted in an image. While we explored various models like GPT-4-Vision, LLaVA, and others, they appear to share similar limitations. These limitations involve image features such as rotation or skewing, variations in shapes and lines such as the dotted lines we encountered, image distortions, small text, and poor image quality or resolution.
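One simple way to account for run-to-run variance is to extract the same diagram several times, measure how much the runs agree, and keep only the edges that appear in most of them. The sketch below shows that idea with plain sets; the edge tuples are hypothetical, and majority voting is an assumption for illustration rather than the approach used in D2G.

```python
from collections import Counter


def consensus_edges(runs, min_votes):
    """Keep edges that appear in at least `min_votes` of the runs."""
    votes = Counter(edge for run in runs for edge in set(run))
    return {edge for edge, count in votes.items() if count >= min_votes}


def jaccard(a, b):
    """Jaccard similarity between two edge sets (1.0 means identical)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Three hypothetical extractions of the same diagram.
run_1 = {("A", "B"), ("B", "C")}
run_2 = {("A", "B"), ("B", "C"), ("A", "C")}
run_3 = {("A", "B")}

stable = consensus_edges([run_1, run_2, run_3], min_votes=2)
agreement = jaccard(run_1, run_2)
print(sorted(stable), round(agreement, 2))
```

A low similarity between runs is itself a useful signal: it can flag a diagram for human review instead of loading an uncertain graph.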

We addressed some of the above issues with pre-processing of images, image classification, and routing to specialized instruction prompts and tools. Leveraging the capabilities of routing in chains and reasoning in a ReAct framework, both made possible using LangChain features, improved our results and our understanding for future solution development. We believe implementing agent and graph reasoning patterns such as Supervisors, Teams, Self-Reflection, Human-in-the-Loop, AutoGPT, and other multi-agent frameworks like crewAI will lead to advances in building novel solutions around vision models.
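The routing step can be pictured as a lookup from a diagram class to the instructions best suited to it. The sketch below uses a plain dictionary and does not use LangChain; the class names, the prompt texts, and `route_prompt` are hypothetical, and the classifier that produces the diagram type is assumed to exist upstream.

```python
# Hypothetical specialized instructions, keyed by diagram class.
PROMPTS = {
    "flowchart": "Extract each shape as a node and each arrow as a labeled edge.",
    "synthetic_route": "Treat each chemical structure as a node and each reaction arrow as an edge.",
    "schematic": "Treat each labeled part as a node and each physical connection as an edge.",
}

DEFAULT_PROMPT = "Extract the objects and the connections between them as a graph."


def route_prompt(diagram_type: str) -> str:
    """Pick the specialized prompt for a diagram class, or fall back."""
    return PROMPTS.get(diagram_type.strip().lower(), DEFAULT_PROMPT)


print(route_prompt("Schematic"))
print(route_prompt("org chart"))
```

Keeping a general fallback matters because a classifier will eventually see a diagram type nobody planned for.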

Future Directions

Vision models are currently advancing at a meteoric pace. Disruptive developments are announced on monthly and sometimes weekly timescales. Google’s Lumiere paper was released in January 2024; the demo was astonishing in showcasing the art of the possible. Then, on February 15th, 2024, OpenAI announced Sora and reset the bar on our understanding of both the pace of progress in vision models and the staggering possibilities ahead. Especially enlightening was the introspective paper that OpenAI released on Sora, which identified existing gaps in their model output, and the thought-provoking concept of “Video generation models as world simulators”.

The state of the art (SoTA) in vision model capabilities, emergent phenomena, and applicability to unforeseen use cases across a wide set of business domains is exciting. The SoTA is on track to progress in 2024 in ways that will likely exceed our current thinking.

Conclusion

We set out to develop a series of experiments across business use cases we have seen in our efforts with Graphable clients. Our experiments produced a D2G application composed of intelligent agents, chains, and a collection of tools. The work yielded insights into applications for many complex business challenges and gave us confidence in developing solutions for existing and future work.

For some use cases, such as medical image analysis and other tasks where spatial reasoning is critical, the gaps as they exist now preclude the use of D2G. However, given the rapid pace of advancement in this field, these limitations may not exist for long.

Overall, we are pleased with the results and excited about what tomorrow may bring!